Lip-sync Settings

Updated: 01/30/2020

This section explains how to make a model lip-sync using the AudioSource volume.

The explanation below assumes that you are working in the same project that was set up in [Import SDK – Place Models].

Because the volume is read from an AudioSource, prepare a separate audio file in a format that Unity can handle.

Summary

To set up lip-sync, the Cubism SDK provides a set of components called MouthMovement.

To set up MouthMovement on a Cubism model, do the following three things.

1. Attach components to manage lip-sync

2. Specify parameters for lip-sync

3. Set component to manipulate values of specified parameters

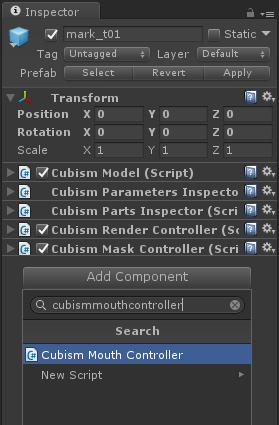

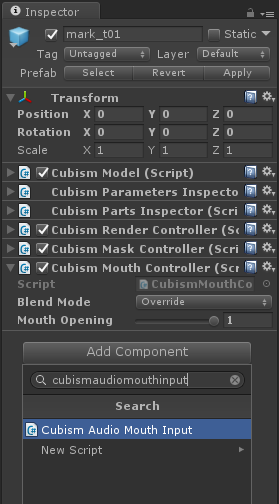

Attach Components to Manage Lip-sync

Attach a component called CubismMouthController, which manages lip-sync, to the GameObject that is the root of the model.

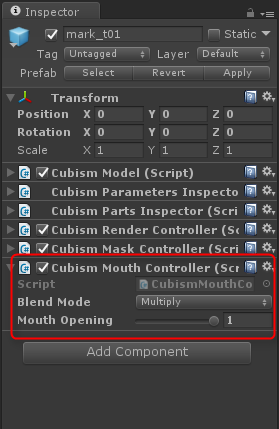

CubismMouthController has two settings.

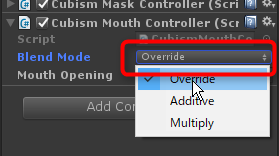

– Blend Mode: Specifies how the Mouth Opening value is combined with the value currently set for the specified parameter.

Multiply: Multiplies the currently set value by the Mouth Opening value.

Additive: Adds the Mouth Opening value to the currently set value.

Override: Overwrites the currently set value with the Mouth Opening value.

– Mouth Opening: How far the mouth is open. A value of 1 is treated as open and 0 as closed. When this value is changed from outside the component, the value of the specified parameter changes accordingly.
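The three blend modes differ only in the arithmetic used to combine the two values. The following is a minimal Python sketch of that arithmetic, not the SDK's code: the function name `blend` and the string mode names are hypothetical, and any clamping to the parameter's range that the SDK may perform is left out.

```python
def blend(current: float, mouth_opening: float, mode: str) -> float:
    """Combine a parameter's current value with the Mouth Opening value."""
    if mode == "multiply":
        # Scale the current value by the mouth opening.
        return current * mouth_opening
    if mode == "additive":
        # Offset the current value by the mouth opening.
        return current + mouth_opening
    if mode == "override":
        # Ignore the current value entirely.
        return mouth_opening
    raise ValueError(f"unknown blend mode: {mode}")


# A parameter currently at 0.5, combined with a fully open mouth (1.0).
for mode in ("multiply", "additive", "override"):
    print(mode, blend(0.5, 1.0, mode))
```

With Override, the result depends only on the Mouth Opening value, which is why it is a convenient choice when lip-sync should fully control the parameter.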

For this example, set Blend Mode to [Override].

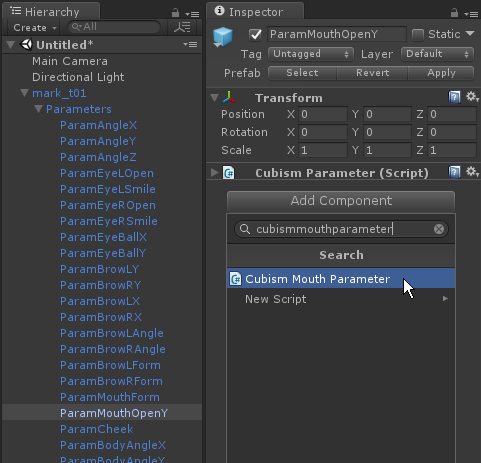

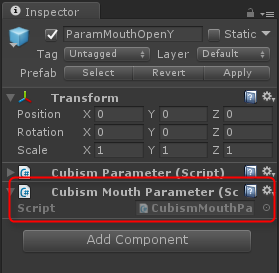

Specify Parameters for Lip-sync

GameObjects that manage the parameters of the model are located under [Model]/Parameters/.

Each of these GameObjects is named after the ID of its parameter.

The CubismParameter components attached to these GameObjects are identical to those that can be obtained with CubismModel.Parameters.

Among these GameObjects, attach a component called CubismMouthParameter to the one whose ID is the parameter to be used for lip-sync.

With the above settings, the mouth can be opened and closed through the Mouth Opening value.

However, these settings alone do not make the model lip-sync.

For automatic lip-sync, a component that manipulates the Mouth Opening value of the CubismMouthController must also be set.

Set Component to Manipulate Values of Specified Parameters

Attach a component for lip-sync input to the GameObject that is the root of the model.

As sample inputs, MouthMovement includes components that drive the mouth open/close value from the volume of an AudioSource and from a sine wave.

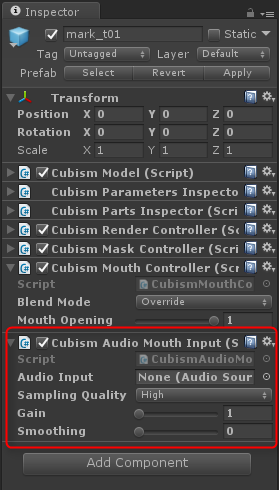

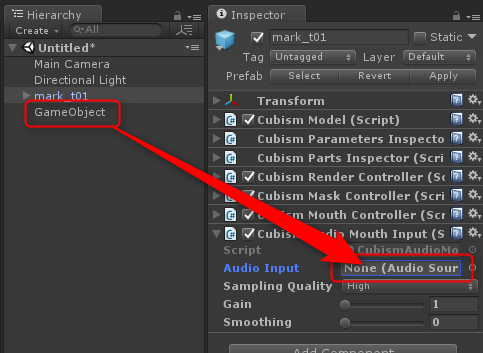
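To illustrate how a sine wave can serve as a lip-sync input, the sketch below maps elapsed time to a mouth opening between 0 and 1. It is an assumption about the general idea, not the SDK's implementation: the function name `sine_mouth_opening` and the `timescale` parameter are hypothetical.

```python
import math


def sine_mouth_opening(elapsed_seconds: float, timescale: float = 10.0) -> float:
    """Return a mouth opening in the range 0..1 that oscillates over time.

    Taking the absolute value of the sine keeps the result non-negative,
    so the mouth repeatedly opens and closes instead of going below 0.
    """
    return abs(math.sin(elapsed_seconds * timescale))


# Sample the value at a few points in time, 0.05 seconds apart.
for step in range(5):
    t = step * 0.05
    print(f"t={t:.2f}s opening={sine_mouth_opening(t):.3f}")
```

An input like this needs no audio, which makes it useful for checking that the controller and parameter are wired up before an AudioSource is added.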

Attach a component called CubismAudioMouthInput so that values can be set from the AudioSource.

CubismAudioMouthInput has the following four settings.

– Audio Input: Sets the AudioSource to be used for input. The volume of the AudioClip set for this AudioSource is used.

– Sampling Quality: Sets the accuracy of the volume sampling. The options below are listed in order of increasing accuracy and increasing computational load.

– High

– Very High

– Maximum

– Gain: Sets the multiplication factor applied to the sampled volume. With a value of 1, the sampled volume remains as-is (it is multiplied by 1).

– Smoothing: Sets how much smoothing is applied to the opening and closing values calculated from the volume. The larger the value, the smoother, but also the higher the computational load.
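The way these settings interact can be sketched as a small pipeline: measure the volume of a window of samples, scale it by Gain, clamp it to the 0 to 1 mouth range, then smooth it over time. The Python below is a rough model under stated assumptions, not the SDK's code. The volume is assumed to be a root-mean-square level, the smoothing is a simple exponential filter swapped in for illustration, and all function names are hypothetical. A longer sample window loosely corresponds to a higher Sampling Quality.

```python
import math
from typing import Sequence


def rms_volume(samples: Sequence[float]) -> float:
    """Root-mean-square level of one window of audio samples (each in -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def target_opening(samples: Sequence[float], gain: float = 1.0) -> float:
    """Scale the sampled volume by Gain and clamp it to the 0..1 mouth range."""
    return min(1.0, max(0.0, rms_volume(samples) * gain))


def smooth(previous: float, target: float, smoothing: float) -> float:
    """Move part of the way from the previous opening toward the target.

    Assumption: an exponential filter stands in for the SDK's smoothing.
    With smoothing = 0 the result follows the target immediately.
    """
    return previous + (target - previous) / (1.0 + smoothing)


# Three hypothetical sample windows: silence, a loud burst, silence again.
windows = [[0.0] * 4, [0.5, -0.5, 0.5, -0.5], [0.0] * 4]
opening = 0.0
for window in windows:
    opening = smooth(opening, target_opening(window, gain=1.0), smoothing=5)
    print(round(opening, 3))
```

In this model, raising the gain makes quiet audio open the mouth wider, while raising the smoothing value makes the mouth respond more slowly to sudden changes in volume.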

For this example, use the following settings. Set Audio Input to the GameObject to which the AudioSource is attached.

– Sampling Quality: High

– Gain: 1

– Smoothing: 5

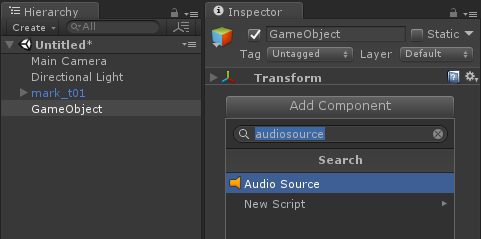

Finally, to provide the volume, attach an AudioSource to any GameObject, assign the prepared audio file as its AudioClip, and set that AudioSource as the Audio Input of the CubismAudioMouthInput above.

This completes the lip-sync setup.

When the scene is run in this state, the audio file set in the AudioSource is played and the model lip-syncs to its volume.